Combating bias in the AI workplace

Catherine Tansey – Sep 13th, 2023

From recruiting, to upskilling, to inclusion and belonging, AI presents a whole new set of strategic and ethical challenges. This article explores the DEI leader's role in a brave new workplace.

Companies have been using AI-powered tools and algorithms for years now, but the arrival of generative AI tools like ChatGPT in the last year has intensified fears and debate over their ethics. Largely polarized, the conversation around AI today swings between claims that it will liberate workers from tedious tasks and concerns that it will render jobs obsolete.

From a DEI perspective, even more worrisome is AI’s potential to amplify bias and further entrench disparities for marginalized employees who are already struggling to find equal footing in the workplace.

In this article we examine the challenges and opportunities for DEI leaders as they help businesses navigate this new frontier of workplace technology.

Without careful watch, AI is likely to deepen biases in recruiting

Job seekers today are already contending with AI in recruiting and hiring, though many may not know it. The EEOC estimates that 83% of employers use AI-powered algorithms for talent acquisition, in the form of chatbots, applicant tracking systems (ATS), programs that analyze candidates’ body language and speech patterns in video interviews, and more.

Despite their widespread adoption, AI tools can deepen bias and perpetuate disparities in the recruiting process when left unchecked, a risk that is only expected to intensify with generative AI. As organizations expand how they use AI in hiring and recruiting, DEI professionals will play a part in ensuring that AI tools produce equitable outcomes.

Since AI algorithms are built on existing data sets, they’re liable to replicate and amplify whatever patterns the data contains, but it’s often difficult to discern what, exactly, those patterns are.

“The use of neural network models means AI’s computations are often a black box, unknowable to AI designers and end users alike, which has implications for accountability and transparency when AI is used for decision making,” write the authors of Artificial Intelligence and the Future of Work: A Functional-Identity Perspective published in the journal Current Directions in Psychological Science.

An algorithm screening candidates typically looks for similarities between current applicants and the data on high-performing employees in the same role. For senior leadership positions, or for roles in male-dominated industries like tech or finance, men are likely to be overrepresented in that data, causing the algorithm to prefer and recommend male applicants over others. That is what happened at Amazon: in 2018, Reuters reported that in 2017 the company had scrapped an AI recruiting tool it had been developing since 2014, after determining that the tool systematically discriminated against women for technical roles.
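To make the mechanism concrete, here is a minimal Python sketch of a similarity-based screener. It is a toy built on stated assumptions, not Amazon’s or any vendor’s actual system: candidates are hypothetical feature vectors, and each applicant is scored by cosine similarity to the average profile of past high performers. Because none of the historical high performers has the hypothetical `womens_org_member` flag set, two otherwise identical applicants receive different scores.

```python
import math

# Hypothetical feature order (illustrative only, not from any real product):
# [experience (scaled 0-1), technical_skill, leadership, womens_org_member]

def centroid(vectors):
    """Average the feature vectors of past high performers into one profile."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]

def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Historical high performers drawn from a male-dominated workforce:
# none of them has the womens_org_member flag set.
past_high_performers = [
    [0.5, 1, 1, 0],
    [0.7, 1, 0, 0],
    [0.6, 1, 1, 0],
]
profile = centroid(past_high_performers)

def score(applicant):
    """Score an applicant by similarity to the historical profile."""
    return cosine(applicant, profile)

# Two applicants with identical qualifications; only the proxy flag differs.
applicant_a = [0.6, 1, 1, 0]
applicant_b = [0.6, 1, 1, 1]

for name, applicant in [("A", applicant_a), ("B", applicant_b)]:
    print(name, round(score(applicant), 3))
```

The scoring function never takes gender as an input; applicant B is penalized solely because a proxy feature was absent from the historical data. That is why auditing the training data and the outcomes matters as much as auditing the model itself.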

Critics say AI tools are plagued by bias, but a lack of oversight is part of what makes regulating them a challenge, and there is little agreement on who is ultimately responsible. Software vendors are meant to be accountable for ensuring that a solution is unbiased, but “little, if any, independent oversight” exists. External auditors aren’t a turnkey fix either: audits may not test for intersectionality, don’t necessarily prove that the product leads to better hiring choices, and are often paid for by the company being audited, according to Hilke Schellmann’s reporting for MIT Technology Review.

What’s more, evaluators often don’t agree on what’s required to show that AI is “unbiased” or “fair,” a direct reflection of their differing personal beliefs about what those words mean, conclude Richard Landers and Tara Behrend in Auditing the AI auditors: A framework for evaluating fairness and bias in high stakes AI predictive models, published in American Psychologist.

Given AI’s penchant for amplifying existing biases and disparities, it’s no wonder Black Americans are more likely than other racial and ethnic groups to express concern. A recent report by the Pew Research Center found that “20% of Black adults who say racial bias in hiring is a problem believe AI being more widely used by employers would make the issue worse, compared with about one-in-ten Hispanic, Asian or White adults.”

Regardless of the challenges of ensuring unbiased products, it’s ultimately the company using the AI hiring tool that is responsible for its own hiring practices. “There's certainly no exemption to the civil rights laws because you engage in discrimination some high-tech way,” says Charlotte Burrows, chair of the Equal Employment Opportunity Commission, as quoted by the Associated Press.

Takeaway: It's essential that DEI leaders bring a data-driven outlook to their recruiting efforts, so that the connections between new technology and bias become clear.
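One simple, data-driven check is to compare selection rates across groups at each AI-assisted hiring step. The Python sketch below applies the four-fifths rule of thumb from the EEOC’s Uniform Guidelines on Employee Selection Procedures, under which a group’s selection rate below 80% of the highest group’s rate is generally regarded as evidence of adverse impact. The function names, the two-group breakdown, and the counts are hypothetical; a real audit would also examine intersectional groups and statistical significance.

```python
# Hypothetical audit data: (number selected, number of applicants) per group
# after an AI screening step. These counts are invented for illustration.

def selection_rates(outcomes):
    """Selection rate per group = selected / applicants."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}

def impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    return {group: rate / highest for group, rate in rates.items()}

def flag_adverse_impact(outcomes, threshold=0.8):
    """True where a group's impact ratio falls below the four-fifths threshold."""
    return {group: ratio < threshold for group, ratio in impact_ratios(outcomes).items()}

screened = {"men": (48, 120), "women": (24, 100)}

for group, ratio in impact_ratios(screened).items():
    print(f"{group}: impact ratio {ratio:.2f}")
print(flag_adverse_impact(screened))
```

With these invented counts, men are selected at 40% and women at 24%, an impact ratio of 0.60, so the women’s group is flagged. The four-fifths rule is a screening heuristic rather than a legal determination, and small samples can trip it by chance.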

Equitable access to AI upskilling and training opportunities

AI’s expansion in the workforce doesn’t necessarily mean workers will be erased, but it will change the roles and responsibilities of jobs, the way people work, and how teams communicate. Companies will have to reskill and upskill their workforces to remain competitive, and employees expect them to do so.

According to a recent report by Salesforce, more than 67% of employees expect their company to provide the training they need to best use generative AI, but 66% say their company does not currently provide learning opportunities.

From a DEI perspective, without a strategy to ensure that a diverse group of employees gains the skills needed to adapt to AI in the workplace, training has the potential to perpetuate disparities, especially given who holds the jobs most threatened by AI.

A report by the Hamilton Project at the Brookings Institution finds that AI poses greater threats to the job security of Black and Hispanic workers than to that of their White and Asian counterparts: a quarter of the Black workforce is employed in one of the 30 jobs with the highest risk score for automation, as is 30% of the Hispanic workforce.

Women face higher risk from AI, too. Clerical positions, in which women are overrepresented, are among the roles most susceptible to automation by generative AI, according to a recent report from the International Labour Organization.

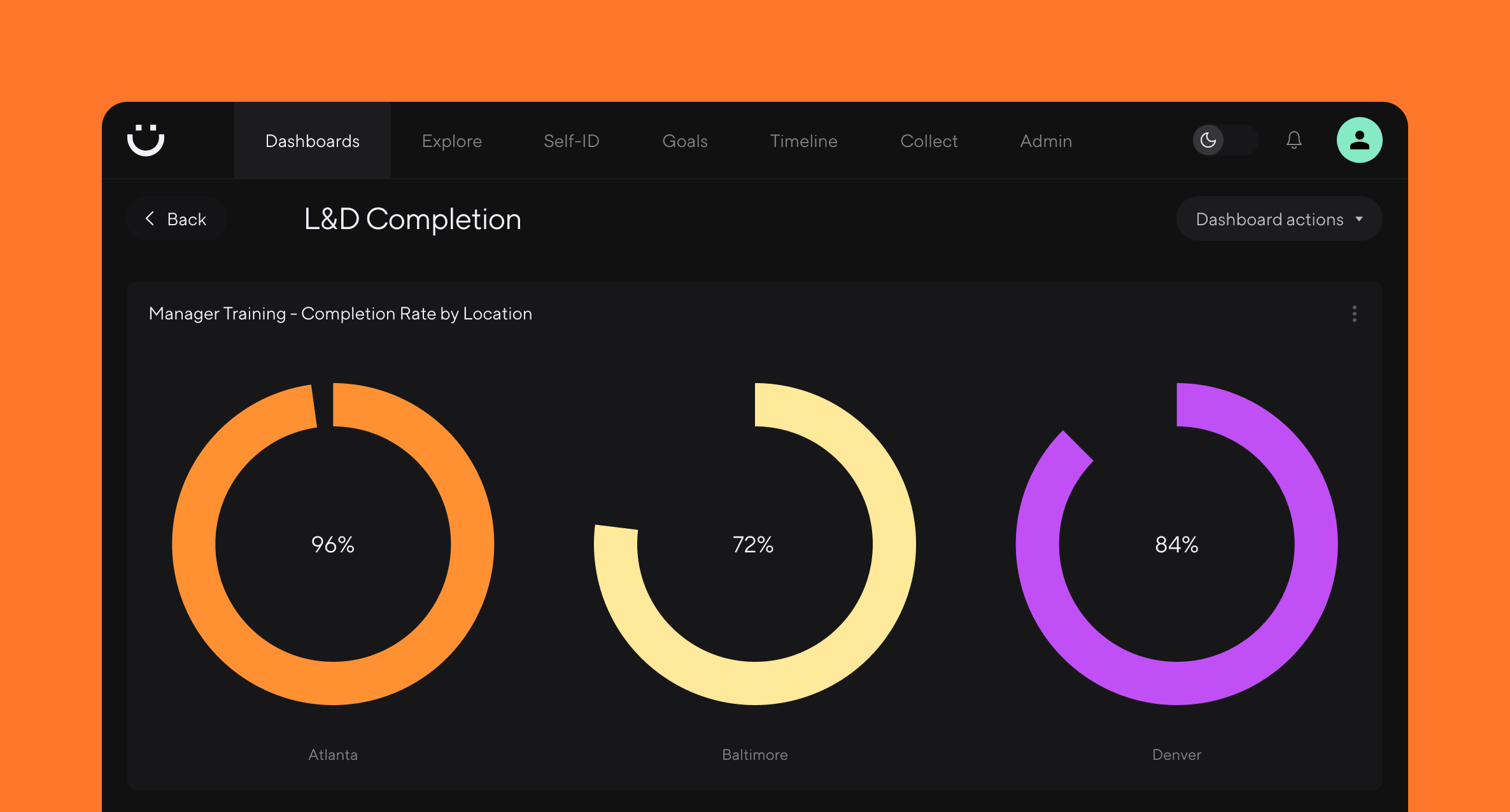

DEI professionals should work in tandem with L&D teams to create equal access to employee development and training opportunities. One way to do so is by bringing more transparency into the process. Good places to start include posting opportunities publicly across the entire org so people can see what’s available (even if it’s on a different career track or they aren’t yet qualified) and publicizing the criteria for admission into programs and nomination-based opportunities.

Takeaway: DEI leaders can and should play a key role in guiding upskilling policies, ensuring that no one is left behind as workplace tools change.

Inclusion and belonging look different in the AI workplace

AI is changing not only how work gets done but also how people feel about their work and their professional identity. While AI is not going to take everyone’s job, generative AI may alter roles, responsibilities, and the work environment so significantly that employees find themselves essentially working in new fields.

Consider a loan consultant responsible for the complex financial analysis behind loan decisions worth millions of dollars. Over time, their employer is likely to replace these once human-only tasks with an automated, more precise algorithm, eliminating the need for the role, or much of it. Or think of a surgeon whose work is analyzed in real time by an AI system that improves patient outcomes and increases accuracy during operations.

These fundamental changes to a role are poised to affect how individuals view their contributions to the workplace, the sense of belonging they feel, and the identity they derive from their work.

“Workers who lose significant aspects of their jobs, or their job roles, will face the greatest identity challenge,” write the authors of Artificial Intelligence and the Future of Work: A Functional-Identity Perspective. “How can they protect their self-esteem and achieve a sense of self-continuity and self-verification if the social self-categorizations enabling those functions no longer exist?” they ask—and how can their organization support them in doing so?

DEI practitioners will be on the hook to foster a culture of belonging in workforces where roles and responsibilities have drastically changed. This will be new ground for all of us, and DEI teams will have to pioneer creative ways to respond to the shifts in identity, belonging, and inclusion in the workplace.

Takeaway: DEI leaders must bring an intersectional outlook to questions of inclusion and belonging to understand which employee populations are being impacted—positively or negatively—by their new, non-human colleagues.

DEI is key to equitable outcomes in the age of AI

It’s impossible to predict exactly how AI will alter the workplace, but it’s clear these technologies are reshaping the roles and responsibilities of DEI in real time. DEI professionals will also be key to addressing the enormously consequential effects of these tools.

In the AI-powered workplace, the need for clear, effective DEI leadership is both strategic and moral. DEI leaders will be essential in guiding the use of AI in recruiting, where, without oversight, AI is all but guaranteed to deepen an already biased process. DEI pros will also be critical to ensuring that all employees gain equal access to the upskilling and retraining that AI in the workplace demands, all while redefining what inclusion and belonging mean in a workplace transformed by AI.

These are just a few of the critical ways DEI will continue to matter in the AI workplace. Rather than threatening DEI, AI further cements the need for DEI in the workplace.

The workplace is changing. Dandi analytics give HR and DEI leaders the power to change with it. Book a demo to learn more.

More from the blog

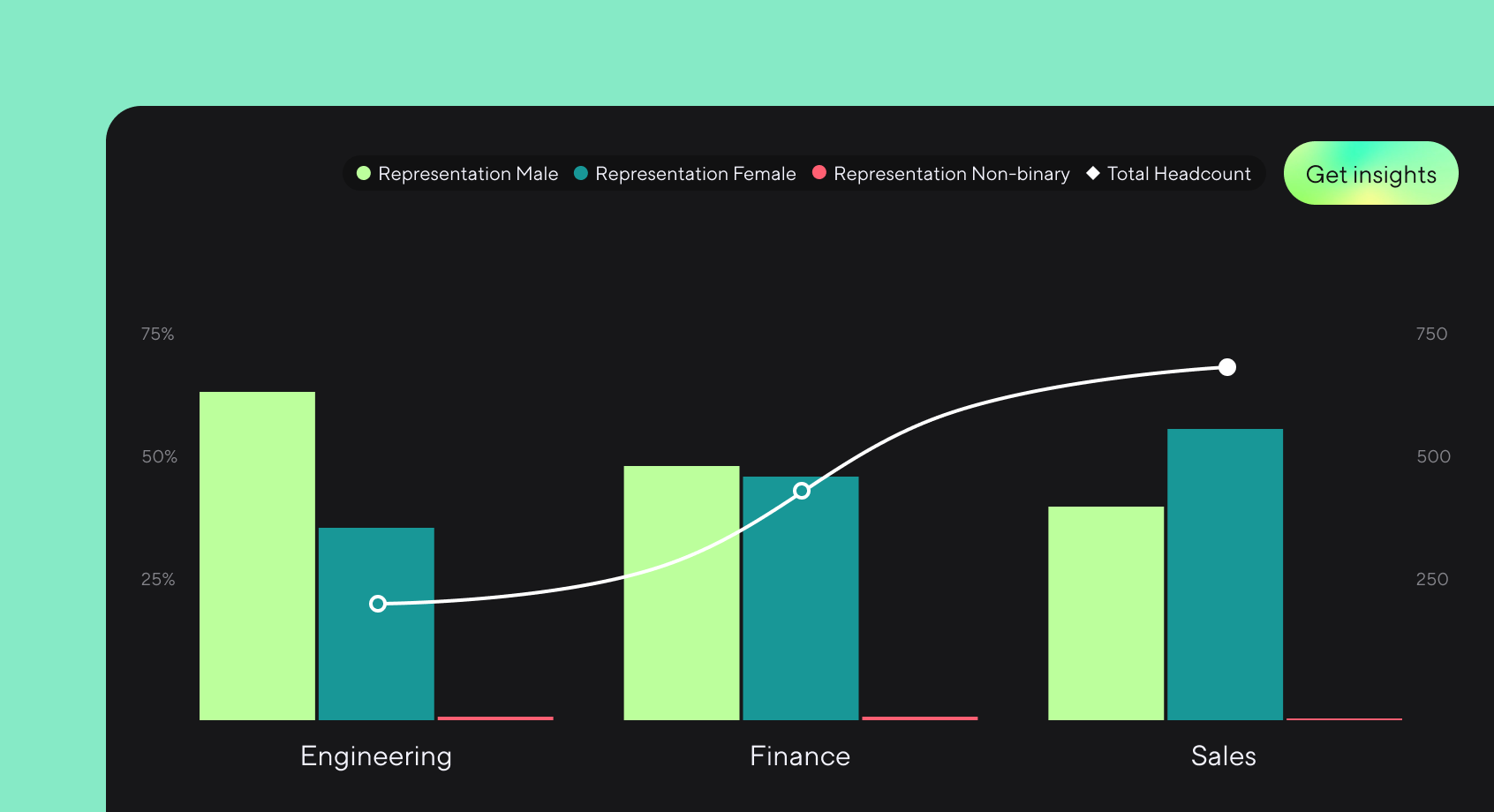

Announcing more powerful Dandi data visualizations

Team Dandi - Oct 23rd, 2024

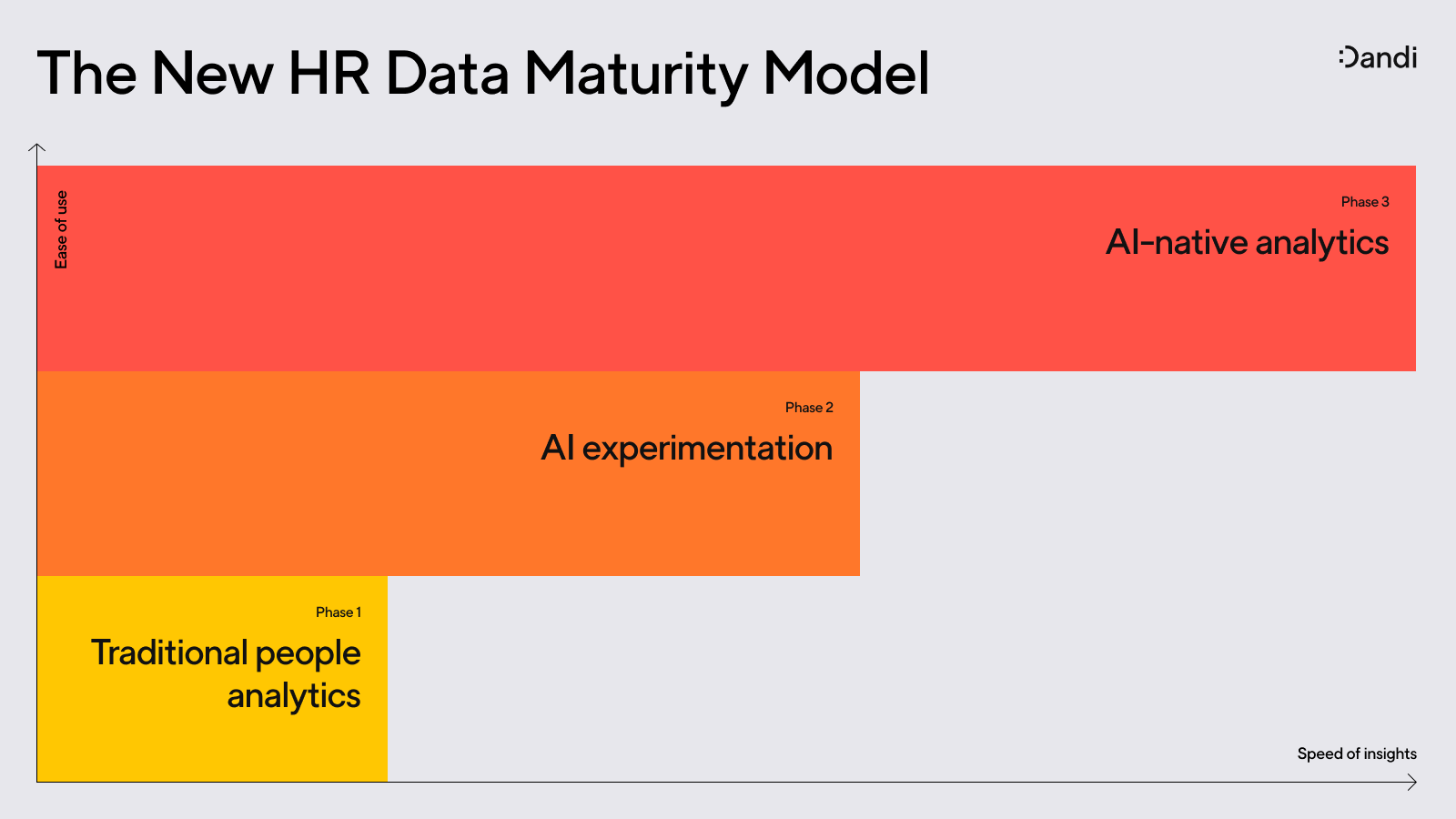

The New Maturity Model for HR Data

Catherine Tansey - Sep 5th, 2024

Buyer’s Guide: AI for HR Data

Catherine Tansey - Jul 24th, 2024

Powerful people insights, 3X faster

Team Dandi - Jun 18th, 2024

Dandi Insights: In-Person vs. Remote

Catherine Tansey - Jun 10th, 2024

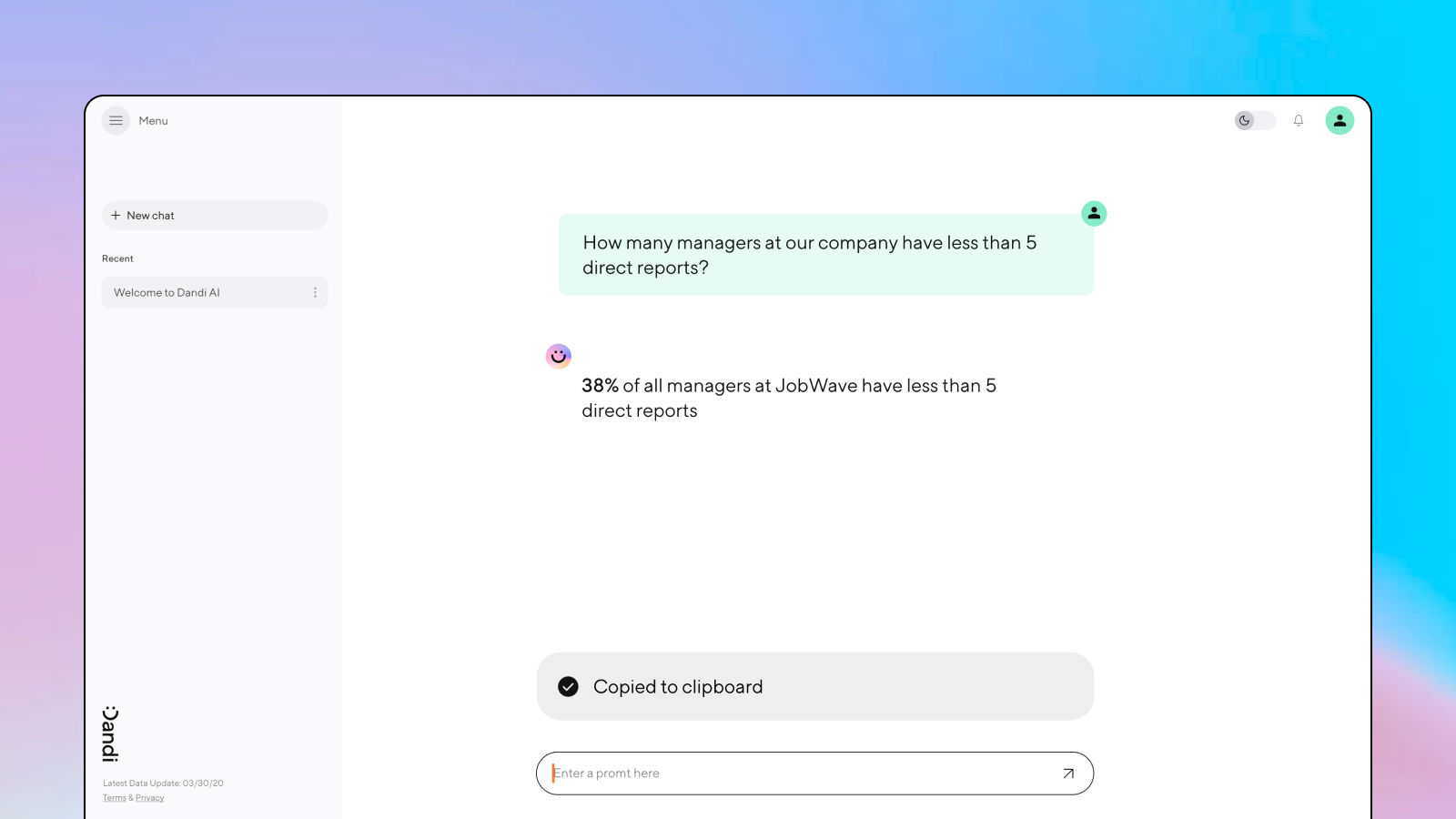

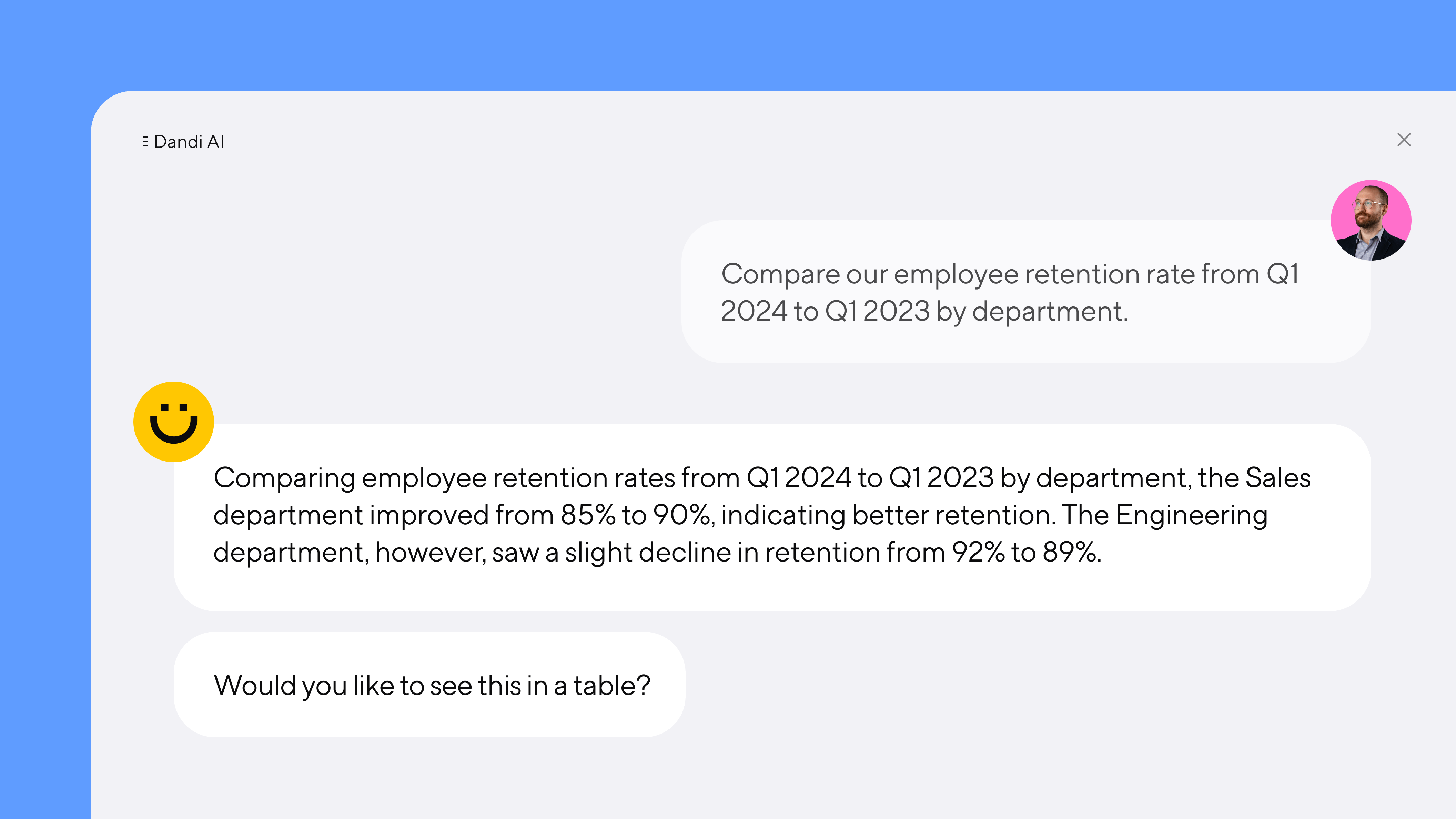

Introducing Dandi AI for HR Data

Team Dandi - May 22nd, 2024

5 essential talent and development dashboards

Catherine Tansey - May 1st, 2024

The people data compliance checklist

Catherine Tansey - Apr 17th, 2024

5 essential EX dashboards

Catherine Tansey - Apr 10th, 2024

Proven strategies for boosting engagement in self-ID campaigns

Catherine Tansey - Mar 27th, 2024